By: Rahul Raj

Use Case

One of the interesting problems in the data platform space is how to bulk transfer petabytes of data correctly, and at very low cost, from on-premises systems to the cloud. Organizations choose different solutions, ranging from open-source tools to enterprise-grade products. In this article, we focus mainly on open-source solutions and how they can be leveraged to build an in-house product.

Apache NiFi as a Data Transfer Solution

Apache NiFi is an easy-to-use, powerful system for distributing data from multiple sources to multiple destinations. It was built to automate the flow of data between systems. The core concepts of NiFi are:

- FlowFile: A FlowFile represents each object moving through the system, and for each one, NiFi keeps track of a map of key/value pair attribute strings and its associated content of zero or more bytes.

- FlowFile Processor: Processors perform the actual work, such as routing, transforming, or mediating data between systems.

- Connection: Connections provide the actual linkage between processors. They act as queues, allowing processors to work at different rates.

- Flow Controller: The Flow Controller maintains knowledge of how processes connect, and manages the threads used by all processes and how those threads are allocated.

- Process Group: A Process Group is a specific set of processes and their connections, which can receive data via input ports and send data out via output ports.
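To make these concepts concrete, here is a toy Python model: a FlowFile holds string attributes plus bytes of content, a processor is modeled as a function that returns zero or more FlowFiles, and a connection is a queue between processors. This is a hypothetical simplification for illustration only; all class and function names are ours, not NiFi's API.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class FlowFile:
    """A unit of data: string key/value attributes plus zero or more bytes of content."""
    attributes: Dict[str, str] = field(default_factory=dict)
    content: bytes = b""


# Hypothetical simplification: a processor takes one FlowFile and returns
# zero or more FlowFiles (zero means the FlowFile was dropped).
Processor = Callable[[FlowFile], List[FlowFile]]


@dataclass
class Connection:
    """A queue linking one processor's output to the next processor's input."""
    queue: Deque[FlowFile] = field(default_factory=deque)


def run_pipeline(source: List[FlowFile], processors: List[Processor]) -> List[FlowFile]:
    """Push FlowFiles through the processors in order, buffering each hop in a Connection."""
    current = Connection(deque(source))
    for processor in processors:
        nxt = Connection()
        while current.queue:
            for out in processor(current.queue.popleft()):
                nxt.queue.append(out)
        current = nxt
    return list(current.queue)


def drop_empty(ff: FlowFile) -> List[FlowFile]:
    # Drop FlowFiles that carry no content.
    return [ff] if ff.content else []


def add_size_attribute(ff: FlowFile) -> List[FlowFile]:
    # 'size' is a hypothetical attribute name used only for this example.
    attrs = {**ff.attributes, "size": str(len(ff.content))}
    return [FlowFile(attrs, ff.content)]


files = [FlowFile({"filename": "a.csv"}, b"1,2,3"), FlowFile({"filename": "empty.csv"})]
result = run_pipeline(files, [drop_empty, add_size_attribute])
print([(f.attributes["filename"], f.attributes["size"]) for f in result])  # [('a.csv', '5')]
```

Real NiFi processors run concurrently on threads managed by the Flow Controller; the sequential loop here only shows how data and attributes move between queues.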

Advantages of Using NiFi

- Open source

- Supports bulk data transfer and delta copy

- Provides CRC-based data validation

- Exposes a REST API for doing things programmatically

- Integrates with major monitoring, alerting, and logging tools

- Supports deployment on distributed (clustered) architectures
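Delta copy depends on remembering what has already been copied so that a follow-up run picks up only new or changed files. The sketch below illustrates one common way to do that, a last-modified timestamp watermark; it is a simplified model of the idea, not NiFi's actual implementation, and the names `FileEntry` and `delta_listing` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class FileEntry:
    path: str
    modified_ms: int  # last-modified time in epoch milliseconds
    size: int


def delta_listing(entries: Iterable[FileEntry], last_seen_ms: int) -> Tuple[List[FileEntry], int]:
    """Return files modified after the stored watermark, plus the new watermark.

    Simplification: files that share the watermark timestamp or arrive late
    would be missed; a production lister must handle those cases explicitly.
    """
    new_files = [e for e in entries if e.modified_ms > last_seen_ms]
    new_watermark = max((e.modified_ms for e in new_files), default=last_seen_ms)
    return sorted(new_files, key=lambda e: e.modified_ms), new_watermark


listing = [FileEntry("/data/a.parquet", 1000, 10), FileEntry("/data/b.parquet", 2000, 20)]
first, mark1 = delta_listing(listing, last_seen_ms=0)      # initial bulk copy: both files
listing.append(FileEntry("/data/c.parquet", 3000, 30))
second, mark2 = delta_listing(listing, last_seen_ms=mark1)  # delta copy: only c.parquet
```

The first call behaves like a bulk copy because the watermark starts at zero; every later call only returns what changed since the previous run.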

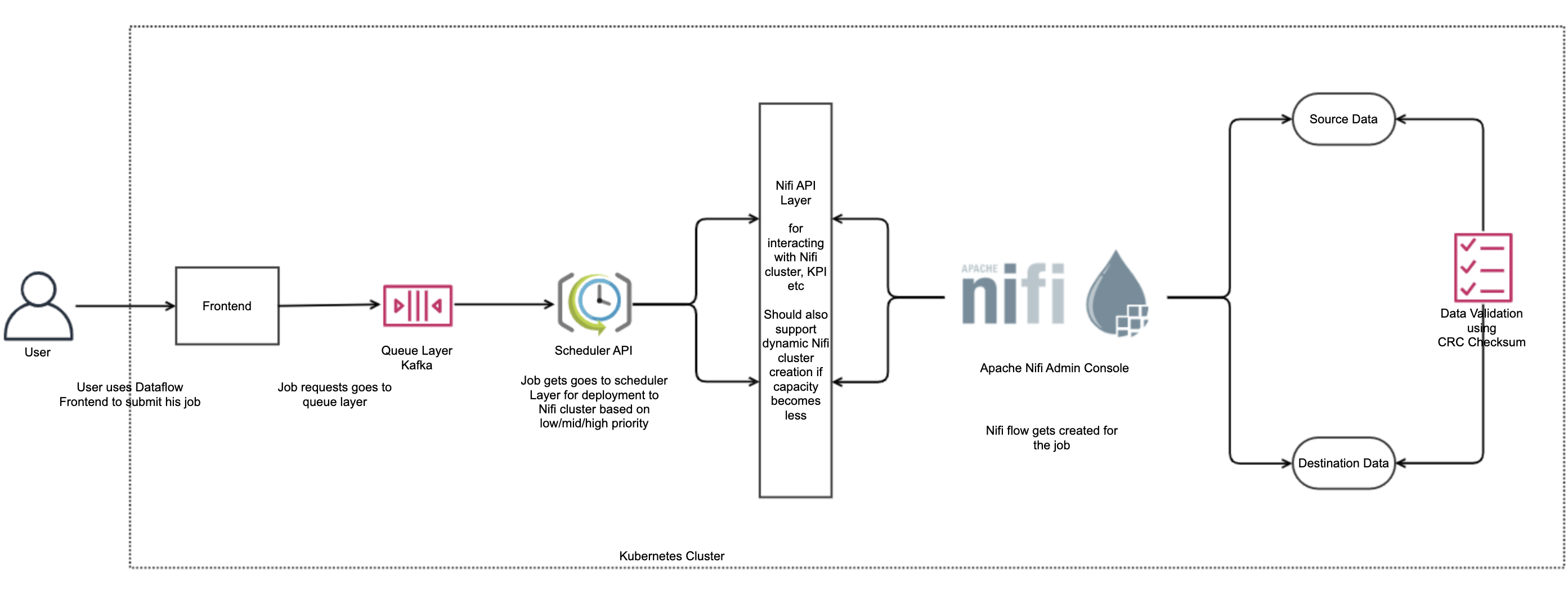

Product Architecture Design

The end-to-end architecture is straightforward and relies on queueing and job scheduling.
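To make the queueing and job-scheduling idea concrete, here is a minimal Python sketch of how copy requests could be ordered by high/medium/low priority. It uses an in-memory heap (Python's `heapq`) as a stand-in for Kafka and the scheduler API, purely to show the ordering logic; the class, function, and job names are hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical priority levels: a lower number is scheduled first.
PRIORITY = {"high": 0, "medium": 1, "low": 2}


@dataclass(order=True)
class _QueuedJob:
    priority: int
    seq: int  # insertion counter keeps FIFO order within a priority level
    job_id: str = field(compare=False)


class CopyJobScheduler:
    """In-memory stand-in for the queue & scheduler layer (not Kafka)."""

    def __init__(self) -> None:
        self._heap: List[_QueuedJob] = []
        self._counter = itertools.count()

    def submit(self, job_id: str, priority: str) -> None:
        heapq.heappush(self._heap, _QueuedJob(PRIORITY[priority], next(self._counter), job_id))

    def next_job(self) -> Optional[str]:
        # Return the highest-priority job, or None when the queue is empty.
        return heapq.heappop(self._heap).job_id if self._heap else None


scheduler = CopyJobScheduler()
scheduler.submit("copy-logs", "low")
scheduler.submit("copy-finance", "high")
scheduler.submit("copy-sales", "medium")
scheduler.submit("copy-hr", "high")
order = [scheduler.next_job() for _ in range(4)]
print(order)  # ['copy-finance', 'copy-hr', 'copy-sales', 'copy-logs']
```

The sequence counter breaks ties so that jobs of equal priority run in submission order; a Kafka-backed version would presumably need the same ordering rule in whichever component consumes the requests.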

- Frontend: The web UI where users submit their data copy requests.

- Queue & Scheduler Layer: This layer can be built with Kafka and a scheduler API to prioritize users' job requests as high, medium, or low priority.

- NiFi API Layer: The NiFi REST API can be called from any programming language, such as Java, Python, or Go. Through the API, requests are submitted to the NiFi cluster to create the required flows and process groups.

- Data Validation: After the NiFi cluster completes the data copy, source and destination data can be validated against each other by comparing CRC checksums. NiFi also has a built-in CRC checksum validator that can be used for this purpose.
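Independently of NiFi's built-in validator, the checksum comparison can be sketched with Python's standard-library `zlib.crc32`. The function names are hypothetical, and in practice the two streams would be opened on the on-prem source and the cloud destination rather than in memory.

```python
import io
import zlib
from typing import BinaryIO


def crc32_of_stream(stream: BinaryIO, chunk_size: int = 1 << 20) -> int:
    """Compute a CRC-32 incrementally so large files never need to fit in memory."""
    crc = 0
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        crc = zlib.crc32(chunk, crc)  # feed the running value back in
    return crc & 0xFFFFFFFF


def validate_copy(source: BinaryIO, destination: BinaryIO) -> bool:
    """Return True when source and destination produce the same CRC-32."""
    return crc32_of_stream(source) == crc32_of_stream(destination)


same = validate_copy(io.BytesIO(b"partition-0001 payload"), io.BytesIO(b"partition-0001 payload"))
different = validate_copy(io.BytesIO(b"partition-0001 payload"), io.BytesIO(b"partition-0001 payl0ad"))
print(same, different)  # True False
```

CRC-32 is designed to catch accidental corruption in transit, not deliberate modification, which fits the goal of confirming that a copy completed intact.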

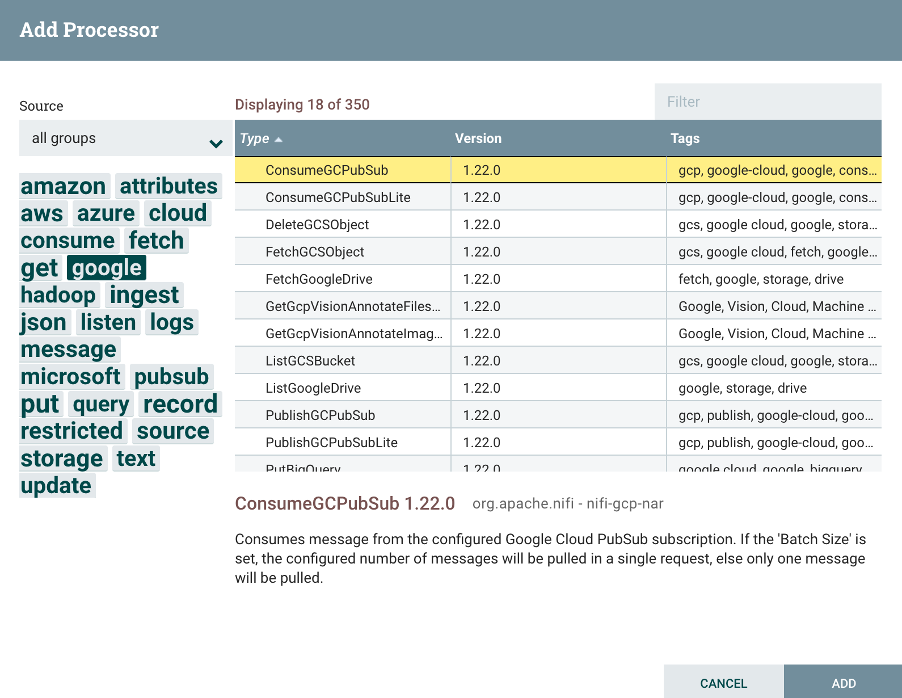

NiFi Processors

NiFi provides processors for almost all major cloud providers, databases, queueing tools, and data warehouses.
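As a hedged illustration of how an API layer might assemble a flow from these processors, the sketch below builds (but does not send) REST requests that add processors to a process group. The endpoint path and payload shape follow our understanding of the NiFi REST API's "create a processor" call and should be checked against the documentation for your NiFi version; the cluster URL, the group id `my-group-id`, and the chosen processor chain are assumptions for illustration.

```python
import json
import urllib.request
from typing import Tuple

NIFI_API = "https://nifi.example.internal:8443/nifi-api"  # hypothetical cluster address


def build_create_processor_request(
    group_id: str, processor_type: str, position: Tuple[float, float] = (0.0, 0.0)
) -> urllib.request.Request:
    """Build (but do not send) a POST that adds one processor to a process group."""
    body = {
        "revision": {"version": 0},  # new components are created at revision 0
        "component": {
            "type": processor_type,
            "position": {"x": position[0], "y": position[1]},
        },
    }
    return urllib.request.Request(
        url=f"{NIFI_API}/process-groups/{group_id}/processors",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed on-prem-to-cloud chain: list local files, fetch them, upload to S3.
PIPELINE = [
    "org.apache.nifi.processors.standard.ListFile",
    "org.apache.nifi.processors.standard.FetchFile",
    "org.apache.nifi.processors.aws.s3.PutS3Object",
]

create_requests = [
    build_create_processor_request("my-group-id", ptype, (0.0, 200.0 * i))
    for i, ptype in enumerate(PIPELINE)
]
# Sending each request (urllib.request.urlopen with authentication configured)
# would still leave connections and processor properties to be set up.
```

Keeping request construction separate from sending makes the payloads easy to inspect and test before pointing the code at a real cluster.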

Conclusion

In our experience, NiFi has proved to be a cost-effective solution for bulk data copy: we transferred several petabytes of data from a source to a destination within a couple of days. Note that NiFi depends entirely on disk space for data copy, so if the source data is around 1 TB, NiFi needs at least 1 TB of disk when the replication factor is 1.
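The disk-space rule above can be expressed as a simple pre-flight check. The article states only the replication-factor-1 case (1 TB of source data needs at least 1 TB in NiFi); scaling linearly for higher replication factors is our assumption, and the function names are hypothetical.

```python
def required_nifi_disk_tb(source_tb: float, replication: int = 1) -> float:
    """Minimum NiFi disk for a copy, ASSUMING it scales linearly with replication."""
    if source_tb < 0 or replication < 1:
        raise ValueError("source_tb must be >= 0 and replication must be >= 1")
    return source_tb * replication


def has_enough_disk(source_tb: float, free_tb: float, replication: int = 1) -> bool:
    """True when the free disk covers the estimated requirement."""
    return free_tb >= required_nifi_disk_tb(source_tb, replication)


print(required_nifi_disk_tb(1.0))     # 1.0, the article's example
print(has_enough_disk(1.0, 0.8))      # False
```

Running such a check before submitting a job avoids starting a copy that would stall once NiFi's disk fills up.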

I hope you find this blog useful in your work.

Thank you.

References

https://nifi.apache.org/documentation/v2