By: Rahul Raj

In today’s data world, the landscape can be divided into two parts: Raw Data and Analytical Data. Raw Data is stored in the storage clusters, databases, and disk units that sit behind business capabilities, while Analytical Data is used for transformations, deriving insights, and preparing business reports. The Analytical Data space can be further divided into two technology stacks: Data Lake/Lakehouse and Data Warehouse. The Data Lake/Lakehouse supports analytical use cases, whereas the Data Warehouse supports business intelligence reports.

The concept of Data mesh explores the challenges of existing analytical data space architectures. The Data mesh objective is to create a foundation for extracting value from analytical data and historical facts at scale. This scale applies to the constant change of the data landscape, proliferation of both sources of data and consumers, diversity of transformation and processing that use cases require, and speed of response to change. To achieve this objective, there are four underpinning principles that any Data mesh implementation embodies to fulfil the promise of scale while delivering the quality and integrity guarantees needed to make data usable:

- Domain Ownership

- Data as a Product

- Self-serve Data Infrastructure

- Federated data Governance

Domain Ownership

Data mesh at its core is founded on decentralization and distribution of responsibility to the people who are closest to the data, organized around business domains. This enables support for continuous change and scalability. Achieving this decentralization requires an architecture that organizes analytical data by domain.

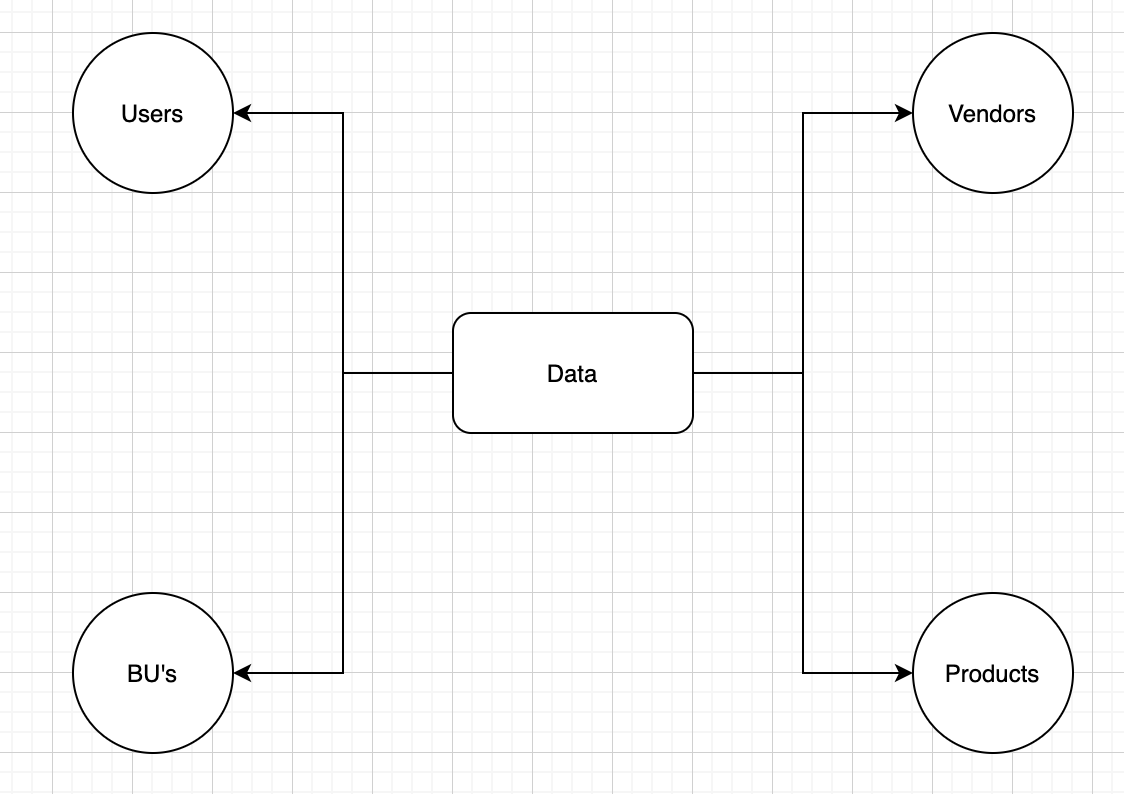

The example above demonstrates the principle of domain-oriented data ownership.

Naturally, each domain will have dependencies on other domains.

Data as a Product

The Data as a Product principle is designed to address data quality and the age-old problem of data silos, or, as Gartner calls it, dark data: “the information assets organizations collect, process and store during regular business activities, but generally fail to use for other purposes”. Analytical data provided by the domains must be treated as a product, and the consumers of that data should be treated as customers.

A Data Product encapsulates three structural components:

- Code: Code can serve three functions:

  - Code for data pipelines responsible for extract, transform, and load

  - Code for data APIs that provide access to the data

  - Code for enforcing traits such as access control

- Data & Metadata: Depending on the nature of the domain data and its consumption models, data can be served as events, batch files, relational tables, graphs, etc., while maintaining the same semantics. For data to be usable, it needs an associated set of metadata, including computational data documentation, semantic and syntax declarations, quality metrics, etc. This covers metadata that is intrinsic to the data (e.g., its semantic definition) and metadata that communicates the traits used by computational governance to implement the expected behavior (e.g., access control policies).

- Infrastructure: The infrastructure supports building, deploying, and running the code and pipelines.
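To make the three components more concrete, here is a minimal Python sketch of how a data product could bundle them together. Everything in it is an assumption made for illustration: the `DataProduct` class, its field names, the `sales` domain, the `analyst` role, and the two pipeline steps are hypothetical and do not come from any particular Data mesh tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# A pipeline step takes a list of records and returns a new list of records.
Step = Callable[[List[dict]], List[dict]]


@dataclass
class DataProduct:
    """Hypothetical container for the three structural components."""
    name: str
    domain: str
    # Code: pipeline steps (ETL) and the roles allowed through the access check.
    pipeline: List[Step]
    allowed_roles: Set[str]
    # Data & metadata: description of the served data and its traits.
    metadata: Dict[str, str] = field(default_factory=dict)
    # Infrastructure: declared needs only; nothing is provisioned here.
    infrastructure: Dict[str, str] = field(default_factory=dict)
    _records: List[dict] = field(default_factory=list, repr=False)

    def run_pipeline(self, raw: List[dict]) -> None:
        """Apply each pipeline step in order and store the result."""
        records = raw
        for step in self.pipeline:
            records = step(records)
        self._records = records

    def read(self, role: str) -> List[dict]:
        """Data API: return a copy of the records if the role is allowed."""
        if role not in self.allowed_roles:
            raise PermissionError(f"role {role!r} may not read {self.name}")
        return list(self._records)


def drop_incomplete(records: List[dict]) -> List[dict]:
    # Illustrative transform: discard records without an amount.
    return [r for r in records if r.get("amount") is not None]


def add_cents(records: List[dict]) -> List[dict]:
    # Illustrative transform: add the amount expressed in cents.
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in records]


orders = DataProduct(
    name="orders",
    domain="sales",
    pipeline=[drop_incomplete, add_cents],
    allowed_roles={"analyst"},
    metadata={"semantic": "one row per confirmed order", "owner": "sales-team"},
    infrastructure={"storage": "object-store", "compute": "batch"},
)
orders.run_pipeline([{"id": 1, "amount": 12.5}, {"id": 2, "amount": None}])
result = orders.read("analyst")
```

In this sketch the pipeline and the access check stand in for the code component, `metadata` carries both the semantic description and the ownership trait, and `infrastructure` only declares what the product needs so that a platform could provision it.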

Self-serve Data Infrastructure

As we know, every Data Product has infrastructure requirements. Manually provisioning that infrastructure every time is not only tiresome but also wasteful. This calls for a self-serve data platform that can be used in an automated manner. The platform essentially runs parallel to production environments but takes care of data analytics and engineering use cases. Most organizations tend to use Data Lake and Lakehouse architectures to meet these requirements. The features the platform should support include scalable polyglot data storage, data product schemas, data pipeline declaration and orchestration, data product lineage, and compute and data locality.
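One way to picture the self-serve idea is a platform that accepts a declarative request and works out the provisioning steps itself. The sketch below is a simplified illustration under stated assumptions: `ProductSpec`, `SelfServePlatform`, the storage kinds, and the step descriptions are all hypothetical, and the "provisioning" only returns a list of planned steps rather than touching real infrastructure.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical storage kinds standing in for "polyglot" storage support.
SUPPORTED_STORAGE = {"object", "relational", "graph"}


@dataclass(frozen=True)
class ProductSpec:
    """Declarative request a domain team would submit (illustrative)."""
    name: str
    storage: str
    schedule: str  # e.g. "daily"


class SelfServePlatform:
    def __init__(self) -> None:
        # Remember what has already been provisioned for each product.
        self._provisioned: Dict[str, ProductSpec] = {}

    def provision(self, spec: ProductSpec) -> List[str]:
        """Return the steps needed for this spec; empty if nothing changed."""
        if spec.storage not in SUPPORTED_STORAGE:
            raise ValueError(f"unsupported storage kind: {spec.storage}")
        if self._provisioned.get(spec.name) == spec:
            return []  # identical request: avoid provisioning twice
        steps = [
            f"create {spec.storage} storage for {spec.name}",
            f"register schema for {spec.name}",
            f"schedule {spec.schedule} pipeline for {spec.name}",
        ]
        self._provisioned[spec.name] = spec
        return steps


platform = SelfServePlatform()
spec = ProductSpec(name="orders", storage="object", schedule="daily")
first = platform.provision(spec)   # three planned steps
second = platform.provision(spec)  # same spec again: no extra work
```

Returning an empty plan for an unchanged spec is one simple way to avoid the repeated, wasteful provisioning that manual processes tend to cause.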

Federated Data Governance

Data mesh allows different teams in different domains to develop individual data products in a decentralized, independent, and domain-specific manner. This creates the need for federated data governance, because some decisions concern domain-specific data needs while others are global and apply rules to all data products horizontally. It also means applying rules on who can cross domains and who needs to operate inside a domain.
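The split between global and domain-specific decisions can be sketched as two sets of policies evaluated together. The example below is only an illustration: the policy functions, the `finance` and `sales` domains, and the product fields (`owner`, `classification`, `retention_days`, `shared_with`) are hypothetical names chosen for this sketch.

```python
from typing import Callable, Dict, List

# A policy inspects a data product description and returns its violations.
Policy = Callable[[dict], List[str]]


def require_owner(product: dict) -> List[str]:
    return [] if product.get("owner") else ["missing owner"]


def require_classification(product: dict) -> List[str]:
    return [] if product.get("classification") else ["missing classification"]


def require_retention(product: dict) -> List[str]:
    return [] if product.get("retention_days") else ["missing retention_days"]


# Global rules apply to every data product, across all domains.
GLOBAL_POLICIES: List[Policy] = [require_owner, require_classification]
# Domain rules are decided by, and apply only within, a single domain.
DOMAIN_POLICIES: Dict[str, List[Policy]] = {"finance": [require_retention]}


def check(product: dict) -> List[str]:
    """Evaluate global policies first, then the product's domain policies."""
    policies = GLOBAL_POLICIES + DOMAIN_POLICIES.get(product["domain"], [])
    violations: List[str] = []
    for policy in policies:
        violations.extend(policy(product))
    return violations


def can_read(reader_domain: str, product: dict) -> bool:
    """Cross-domain rule: allow the owning domain or explicitly shared ones."""
    return (reader_domain == product["domain"]
            or reader_domain in product.get("shared_with", []))


sales = {"domain": "sales", "owner": "sales-team",
         "classification": "internal", "shared_with": ["marketing"]}
finance = {"domain": "finance", "owner": "fin-team",
           "classification": "restricted"}

sales_violations = check(sales)      # passes the global rules
finance_violations = check(finance)  # fails the finance-only retention rule
```

Keeping the global list short and letting each domain register its own rules mirrors the federated model: common standards are enforced everywhere, while domain teams keep control over what is specific to them.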

Conclusion

Overall, the principles of Data mesh bring Operational and Analytical Data closer to each other, while preserving the distinction between the two and using a common infrastructure stack to meet the needs of analytical data.