By: Suyash Shailesh Ghadge

Introduction:

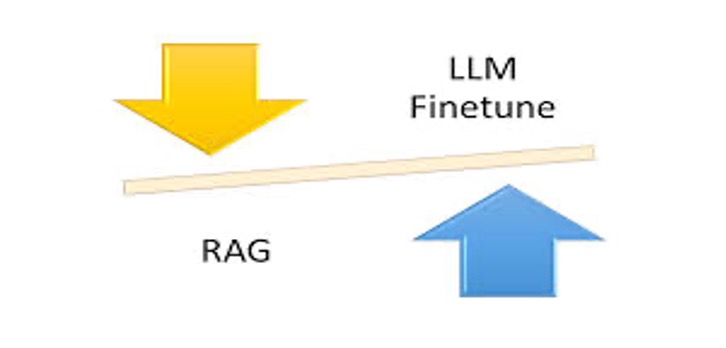

Choosing correctly between retrieval-augmented generation (RAG) and fine-tuning is crucial for getting the best performance out of a language model.

Objective:

The goal here is to help you understand when to use RAG versus fine-tuning with large language models (LLMs), considering factors like model size and specific use cases.

Background:

Large language models (LLMs) are AI systems trained on vast amounts of text data to perform tasks like generating text or answering questions. Retrieval-augmented generation (RAG) enhances an LLM by retrieving relevant knowledge from external sources such as databases and adding it to the prompt before the model generates text, which makes the model more accurate and versatile.
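To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production design: the knowledge base is a hypothetical in-memory list, retrieval is a simple word-overlap score rather than a vector search, and the final call to an LLM is left out because it depends on the provider. The names `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are invented for this example.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base (assumption for illustration);
# a real system would query a database or a vector index instead.
KNOWLEDGE_BASE = [
    "Premium accounts include a dedicated advisor and quarterly portfolio reviews.",
    "Wire transfers submitted before 3 pm are processed the same business day.",
    "Claims for water damage require photos and a signed contractor estimate.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, passage: str) -> int:
    """Count word occurrences shared by the query and the passage."""
    return sum((tokenize(query) & tokenize(passage)).values())


def retrieve(query: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages that share the most words with the query."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str], k: int = 1) -> str:
    """Place the retrieved passages ahead of the question in the prompt."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "Use the context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # The resulting string is what would be sent to the LLM.
    print(build_prompt("What documents do water damage claims need?", KNOWLEDGE_BASE))
```

The model itself is never modified here: adding a new passage to the list changes what gets retrieved, with no retraining involved.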

Body:

- RAG Advantages Over Fine-Tuning:

- RAG doesn't modify the original LLM, so the model retains its full capabilities.

- It can draw on external knowledge sources without retraining, which keeps costs down.

- Updating knowledge is easier with RAG than with fine-tuning: you change the knowledge source instead of retraining the model.

- When to Fine-Tune vs. Use RAG for Different Model Sizes:

- Large Models: RAG is preferred for large models like GPT-4 to maintain their broad capabilities.

- Medium Models: Both RAG and fine-tuning are viable; the choice depends on how much of the model's general knowledge needs to be retained.

- Small Models: Fine-tuning is often better for smaller models since they lack extensive pre-trained capabilities.

- Using RAG and Fine-Tuning for Pre-Trained Models:

- RAG for General Tasks: Effective for leveraging the model's general knowledge without the risk of forgetting that fine-tuning carries.

- Fine-Tuning for Specific Tasks: Good for tasks requiring specialized knowledge and memorization.

- Financial Services Examples for RAG and Fine-Tuning:

- Investment Management: RAG can personalize advice by retrieving client profiles; fine-tuning risks eroding the model's general conversational abilities.

- Insurance Claim Processing: Fine-tuning suits tasks involving static policies and document analysis.

- Customer Service Chatbots: A combined approach may work best, using RAG for general chat and fine-tuning for company-specific knowledge.

- Key Practical Considerations:

- Access to LLMs: RAG needs access to a large model, whereas smaller models are easier to build in-house.

- Data Availability: Fine-tuning requires task-specific training datasets; RAG relies on external knowledge sources.

- Knowledge Flexibility: RAG allows knowledge to be updated without retraining; a fine-tuned model needs periodic retraining to stay current.
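The guidance in the list above can be sketched as a small decision helper. This is one possible encoding, not a definitive rule set: the `UseCase` fields, the three size labels, and the order in which the rules are checked are all assumptions made for illustration, and real projects will weigh these factors less mechanically.

```python
from dataclasses import dataclass

VALID_SIZES = {"small", "medium", "large"}


@dataclass
class UseCase:
    """Hypothetical description of a project (all fields are assumptions)."""
    model_size: str                # "small", "medium", or "large"
    needs_general_abilities: bool  # must the model keep its broad skills?
    knowledge_changes_often: bool  # does the underlying knowledge change frequently?
    has_task_dataset: bool         # is a task-specific training set available?


def recommend(case: UseCase) -> str:
    """Return "rag", "fine-tune", or "both" for the given use case."""
    if case.model_size not in VALID_SIZES:
        raise ValueError(f"unknown model size: {case.model_size!r}")

    # Fine-tuning requires a task-specific dataset; without one, only RAG remains.
    if not case.has_task_dataset:
        return "rag"

    # Large models: prefer RAG to preserve their broad capabilities.
    if case.model_size == "large":
        return "rag"

    # Small models: fine-tuning is often better; add retrieval when the
    # knowledge changes too often for periodic retraining to keep up.
    if case.model_size == "small":
        return "both" if case.knowledge_changes_often else "fine-tune"

    # Medium models: either can work, depending on how much general
    # knowledge must be retained and how often the knowledge changes.
    if case.needs_general_abilities or case.knowledge_changes_often:
        return "rag"
    return "fine-tune"


if __name__ == "__main__":
    chatbot = UseCase("small", needs_general_abilities=False,
                      knowledge_changes_often=True, has_task_dataset=True)
    print(recommend(chatbot))
```

Checking data availability first reflects the point that fine-tuning is not an option without a suitable dataset, whatever the model size.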

Conclusion:

The choice between retrieval-augmented generation (RAG) and fine-tuning depends on factors such as model size and specific task requirements. By weighing these factors carefully, organizations can optimize their AI systems for applications in finance and beyond.

There is no one-size-fits-all solution. Using RAG and fine-tuning together can help a language model realize its full potential: the model keeps its general conversational skills while effectively incorporating specific knowledge.

As AI continues to improve, understanding the distinctions between RAG and fine-tuning becomes increasingly important. By applying the advice in this guide, companies can tailor AI solutions to meet their own needs.

References:

https://arxiv.org/abs/2401.08406

https://medium.com/@bijit211987/when-to-apply-rag-vs-fine-tuning-90a34e7d6d25