By: Anirban Nandi

According to International Data Corporation (IDC) estimates, the global data volume will reach an astounding 175 zettabytes by 2025. To put that in perspective, a zettabyte is 10^21 bytes, so that is 175 followed by 21 zeros! Not all of this data is useful, and extracting business insights from such huge swathes of data is akin to looking for a needle in a haystack.

Managing large data workloads is a complex challenge. It involves choosing data collection methods, working with highly specialized big data tools and selecting the right ones for your application, complying with local and international regulations, overcoming performance bottlenecks caused by hardware and software limitations, and addressing security concerns.

That said, the picture is not all gloomy. Companies have been speeding up their innovation and development processes to get the most out of their analytics tools and platforms. However, even with the most innovative product at hand, the key lies in scaling up as the company and its data grow. Failing to grow your infrastructure along with your data causes major bottlenecks in big data and analytics workloads. Migrating to a different infrastructure is an alternative, but it is a complicated and time-consuming process that can lead to significant downtime and costs. Companies must therefore invest in choosing infrastructure that can scale as their data grows.

Focus on scalability

Scaling is difficult, but it is necessary for a data-driven company to grow. Companies should scale when performance issues snowball and start to affect workflow, efficiency, and customer retention. The most common performance bottlenecks are high CPU usage, low memory, high disk I/O, and high disk usage.
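As a rough illustration of watching for these bottlenecks, the Python sketch below flags any metric that crosses a threshold. The metric names, the threshold values, and the `find_bottlenecks` helper are all hypothetical assumptions chosen for illustration, not recommendations; in practice the readings would come from whatever monitoring system you already run.

```python
from typing import Dict, List

# Hypothetical thresholds (in percent), chosen only for illustration.
THRESHOLDS: Dict[str, float] = {
    "cpu_percent": 85.0,           # high CPU usage
    "memory_percent": 90.0,        # memory nearly exhausted (low free memory)
    "disk_io_busy_percent": 80.0,  # disk busy serving I/O most of the time
    "disk_used_percent": 90.0,     # disk nearly full
}


def find_bottlenecks(metrics: Dict[str, float],
                     thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return, sorted by name, the metrics whose reading exceeds its threshold.

    Metrics missing from `metrics` are treated as 0 and never flagged.
    """
    return sorted(
        name for name, limit in thresholds.items()
        if metrics.get(name, 0.0) > limit
    )


if __name__ == "__main__":
    # A single hypothetical snapshot of one server's metrics.
    snapshot = {
        "cpu_percent": 92.5,
        "memory_percent": 71.0,
        "disk_io_busy_percent": 83.0,
        "disk_used_percent": 64.0,
    }
    print(find_bottlenecks(snapshot))  # ['cpu_percent', 'disk_io_busy_percent']
```

This checks a single snapshot; a real decision to scale would look at sustained readings over time rather than one spike.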

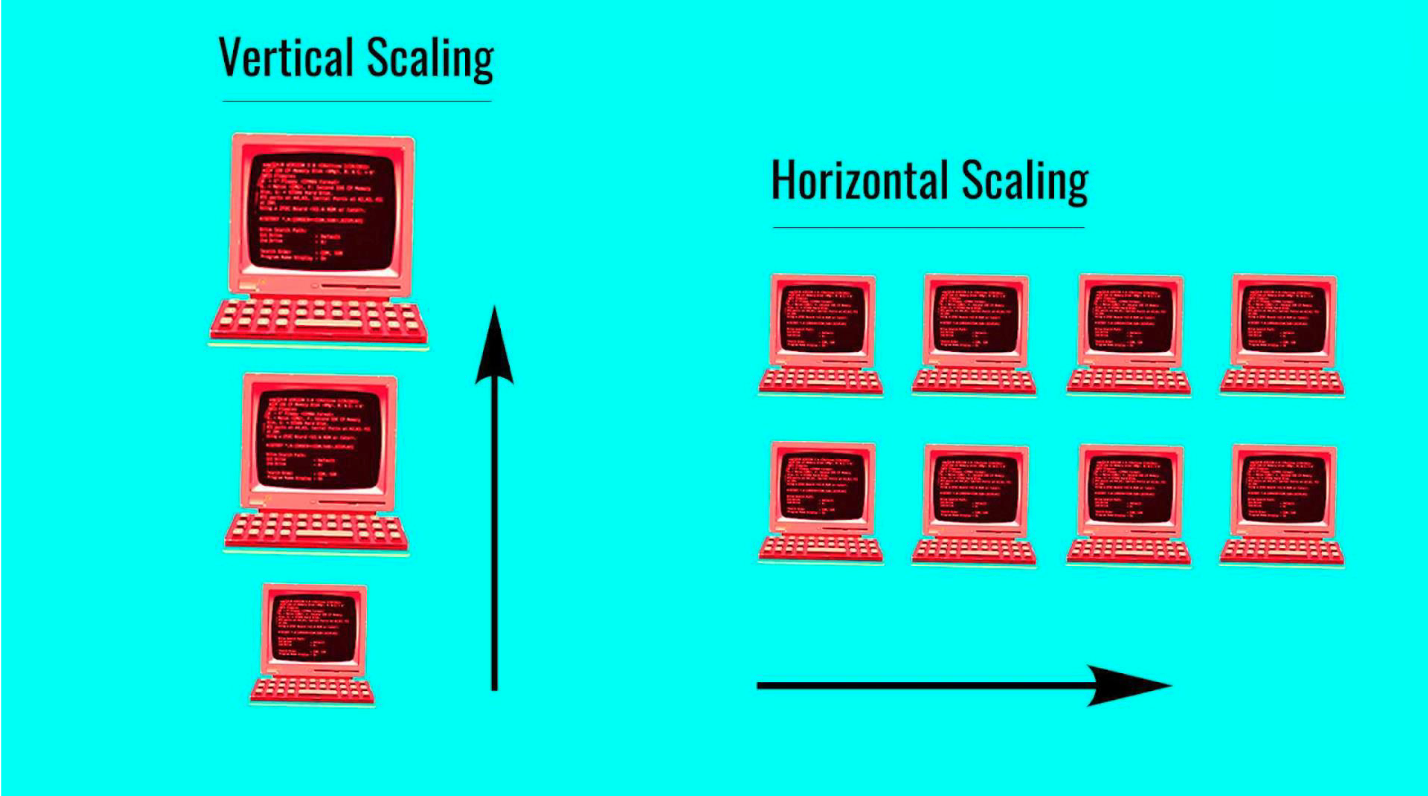

There are two common ways to scale your data analytics solution:

Vertical scaling, or scaling up, involves replacing a server with a faster one that has more powerful resources, such as processors and memory. It is generally used in the cloud, because scaling dedicated servers is relatively difficult. Bare metal servers, a type of dedicated server with additional features, offer an alternative: they can be scaled up and down from a single UI platform with minimal downtime.

The other method is horizontal scaling, or scaling out. It refers to adding more servers for parallel computing and is considered best suited to real-time analytics projects, because it allows companies to design a proper infrastructure from the ground up and add more servers as needed. Horizontal scaling tends to incur lower costs in the long run.

Each scaling method has its own advantages. Horizontal scaling combines the power of several machines into a single system, thereby improving performance; it also offers built-in redundancy and helps optimize costs. Vertical scaling, on the other hand, makes the most of existing hardware, simplifies resource upgrades, and lowers energy costs.
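To make the scale-out idea more concrete, here is a minimal Python sketch of the partition-then-combine pattern behind horizontal scaling: records are split into shards, each worker processes its own shard independently, and a coordinator merges the partial results. The function names and the event-counting task are hypothetical, and a thread pool on one machine merely stands in for a cluster of servers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def partition(records: List[str], num_workers: int) -> List[List[str]]:
    """Split records into num_workers shards using round-robin assignment."""
    shards: List[List[str]] = [[] for _ in range(num_workers)]
    for i, record in enumerate(records):
        shards[i % num_workers].append(record)
    return shards


def count_events(shard: Iterable[str]) -> Counter:
    """Work one 'server' does on its own shard: count each event type."""
    return Counter(shard)


def scale_out_count(records: List[str], num_workers: int) -> Counter:
    """Fan shards out to workers, then merge their partial counts."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    shards = partition(records, num_workers)
    # Threads stand in for separate servers here. In CPython they share one
    # interpreter, so this shows the structure of scale-out, not a speed-up.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(count_events, shards))
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)  # combine step performed by the coordinator
    return total


if __name__ == "__main__":
    events = ["play", "pause", "play", "stop", "play"]
    print(scale_out_count(events, num_workers=2))
```

The design point is that adding workers changes only how the data is split, not the final answer: `scale_out_count(events, 1)` and `scale_out_count(events, 4)` return the same counts, which is what lets a scale-out system grow by adding machines.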

All about XOps

There are four main components of scalability: processes, automation, people, and leadership. Processes and automation fall under the broader umbrella of the overall workflow, and such workflows are often governed by a framework or set of guidelines covering the end-to-end process. Enter XOps. The term has been gaining popularity and acceptance across industries and companies, and earlier this year it made Gartner’s Top Ten Data and Analytics Trends for 2021.

XOps can be considered the natural evolution of DataOps in the workplace, one that enables AI and machine learning workflows. XOps aims to bring together DataOps, MLOps, PlatformOps, and ModelOps into an enterprise technology stack that supports automation and scalability while avoiding duplicated technology and processes. Hence, it is important to understand XOps when we discuss scalability in data analytics.

XOps enables data and analytics teams to operationalize their processes and automation from the very beginning, rather than treating this as an afterthought. Here, ‘operationalize’ means orchestrating processes to meet defined, measurable goals that are aligned with business priorities.

Examples of successful scaling

Netflix, which started in 1997 as a DVD rental company, has grown into one of the world’s most valuable firms, with over 214 million subscribers. The pace and smoothness of its scaling are remarkable. Netflix has invested heavily in developing the big data and analytics tools that underpin its highly successful business model.

Apart from Netflix, social media giant Twitter also deserves a mention. According to one paper, two factors helped Twitter scale up rapidly: using schemas to help data scientists understand its petabyte-scale data stores, and integrating several components into production workflows.

Companies like Netflix and Twitter have survived and thrived amid stiff competition because they scaled rapidly, sustainably, and responsibly. That is a good lesson for any company playing the long game.